Building an AI Data Analyst with LLMs - Part 2

Making the challenges less challenging.

This post is the second in a series where I talk about building an Open Source AI Data Analyst using LLMs. You can read the first part here.

If you’re familiar with python, you can follow along with my experiments on my Github repo.

Recap

In the first part of this series, we talked about the need for building an AI data analyst that you can talk to using natural language and ask questions about your business. The agent should then be able to respond with the queries, charts or dashboards that give you the insights you need to take decisions.

We then spoke about why data warehouses and platforms vary significantly from one organisation to the next and how that poses a problem when you’re trying to build a system that needs to understand that data in order to function. Now, let’s talk about how we can begin charting a way towards the solution.

Solution Design

Before we even get started thinking about how to design a solution, I’d like to share the philosophy that I tend to lean on when faced with a very large problem to solve.

Instead of solving the problem badly for everyone, you should try to solve it really well for a smaller set of people.

Which brings us to the next question, how do we select this smaller set of people? Ideally we’re looking for 2 things.

Some level of predictability in terms of code structure, the role of models and the relations between them.

Some documentation explaining what the models do.

Sizeable community, we want to solve for a smaller set of people but also need that set to not be too small.

Step 1. Picking a subset of the problem to solve

dbt is a data transformation framework that’s been gaining popularity for the last 3-4 years. In simple terms, dbt offers functionality on top SQL which allows you to build common warehouse structures like dimension tables with fewer lines of code, it keeps track of the lineage of all your metrics and provides the ability to document your models.

These functionalities not only make dbt a great tool to manage analytics code bases, it also meets all of the criteria for a subset of data engineers we can solve for.

It has a fairly large and active community

Somewhat predictable way of building model lineages

In built, column-level documentation capabilities.

Hence, I decided to build an AI agent that can learn about data warehouses built using dbt.

Step 2. Testing our hypothesis

Now that we’ve reduced the problem space to a more manageable size, let’s try and build an MVP and see how good of a job we’re able to do of responding to questions.

Now to build an MVP, we essentially need to build two systems.

Project Indexer

The first system is responsible for “learning” about the project. It does so by reading all of the available documentation and creating a knowledge base with everything it finds.

Look for all YAMLs in our project folder and its children

dbt documentation is stored in yaml files which can be located in any child directory of the project root folder.Parse YAML contents to extract documented models

These yaml files may also contain other information pertaining to the project, or unrelated data assets, so we parse all of the files to extract all documented models and convert it into a JSON representation.Convert this documentation into readable documents

We convert the model JSON into a readable explanation text document which can be eventually be used as a prompt in a Retrieval Augmented Generation mechanism.Store all of these documents in a vector store

We’ll convert all of these documents into vector embeddings and store them in a vector store for later retrieval

Question Answerer

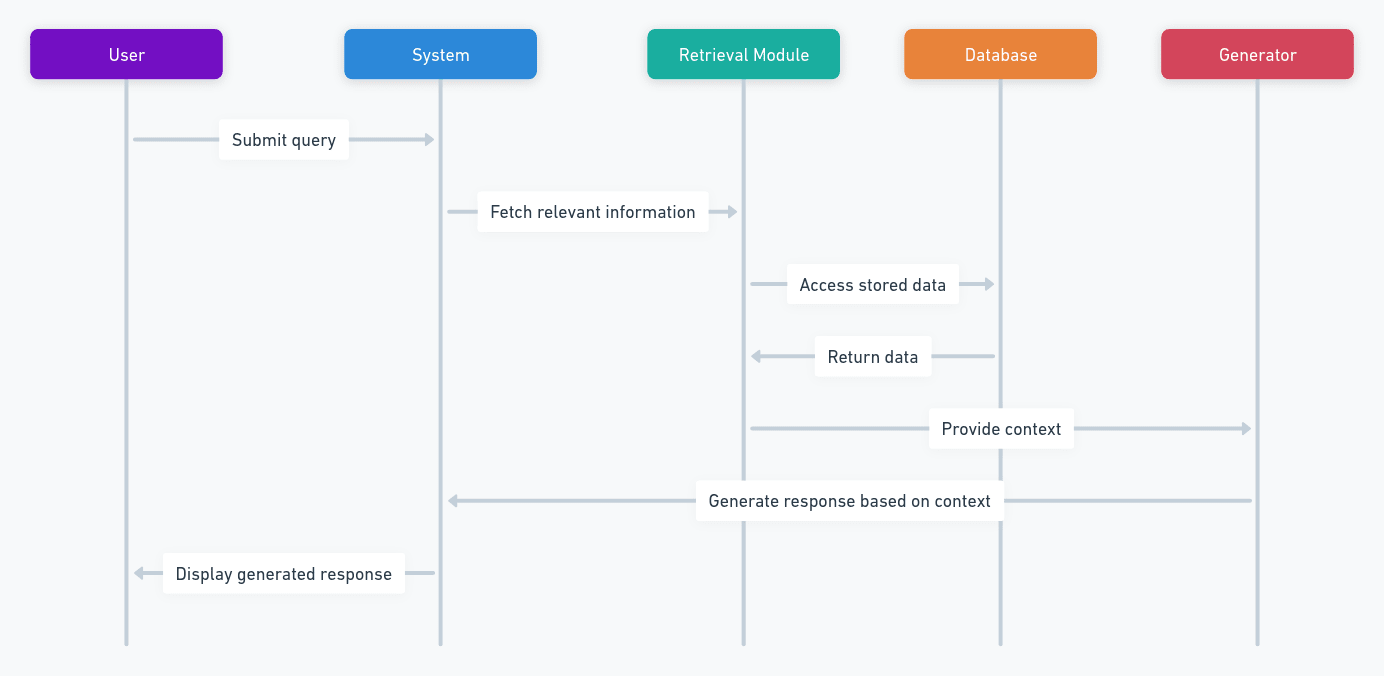

The second system uses Retrieval Augmented Generation (a.k.a RAG) to respond to questions asked about data. Every time a user asks a question, this system will:

Look for relevant information

We query the vector database with the question and retrieve the documents which are most similar to our question.Create a prompt

We compose a prompt using the question asked by the user and by adding the documentation text of the retrieved documents as knowledge that the LLM may use to answer the question.Optionally you can also add instructions to tune the kind of response you expect from the LLM

Generate a response

The prompt so created is used to generate a response which can then be returned to the user.

Next Steps?

So far, we’ve built a very basic system that’s capable of reading a dbt code base, storing information about models in a vector database and using that stored information to answer questions about data.

You can use the library directly inside of a jupyter notebook or interact with it using the streamlit interface provided.

However, there are still a few issues that need to be dealt with. These are:

The answers that the library provides are often very good, but they’re not perfect. They can sometimes miss out on edge cases, mis-interpret terms and LLMs still hallucinate.

Jupyter notebooks or streamlit interfaces are cool if you’re just prototyping some functionality but they’re hard to deploy at an organisation as-is.

Users may have security concerns while sharing their data with a new application.

We’ll talk about how I’m trying to solve for these issues in upcoming posts.